NETWORKING

Question 1: (a) What are the main differences between connectionless and connection oriented communication?

(b) What are the essential differences between packet switching and message switching? Explain with the help of a suitable diagram.

Question 2: A system has an 𝑛-layer protocol hierarchy. Applications generate messages of length M bytes. At each of the layers, an h-byte header is added. What fraction of the network bandwidth is filled with headers?

Question 3: State the Nyquist theorem. For what kind of physical medium is it applicable? Will it work for a noisy channel?

Question 4: Define the throughput expressions of Aloha and Slotted Aloha. Also draw throughput Vs load graphs for the above protocols.

Question 5: (a) Explain hidden station and exposed station problems in WLAN protocols with the help of an illustration. What is the limitation of CSMA protocol in resolving the above problems? Explain the use of virtual channel sensing method as a proposed solution.

(b) Sketch the differential Manchester encoding for the bit stream: 0011111010111. Assume the line is initially in the low state.

Question 6: Draw the Ethernet frame format and explain its fields. Is there any limit on the maximum and minimum size of an Ethernet frame? Explain.

Question 7: How does the Border Gateway Protocol work? Explain it with the help of a diagram. How does it resolve the count-to-infinity problem that is caused by other distance vector routing algorithms?

Question 8: Draw the header format of TCP and explain the following fields: ACK bit, RST bit, PSH bit, and Flags.

Question 9: Explain the window management scheme in TCP through an illustration.

Question 10: Discuss the Silly Window Syndrome, which causes degradation of TCP performance. What is the proposed solution? Explain.

Question 11: Explain the terms bit rate, baud rate and bandwidth with the help of an example. Also draw the modulation schemes for the following: (i) QPSK (ii) QAM-16, and describe them.

Question 12: What are the differences between the leaky bucket and token bucket algorithms? How is the token bucket algorithm implemented? Explain.

Question 13: How does DES work? Explain.

SOLVED ANSWER

Ans1:(a)

Connectionless communication-

In this type of communication there is no need to establish a connection between sender and receiver

Less reliable

Lost or corrupted data is not resent by the protocol itself

It requires far less overhead than connection-oriented communication

Connection oriented communication-

In this type of communication a connection must be established before data transfer

More reliable than connectionless communication

Information can be resent if an error is detected at the receiver side

It can have higher overhead, because of connection setup and acknowledgments
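As an illustration, here is a minimal sketch using Python's standard `socket` module on the loopback interface. It assumes UDP as the example of connectionless communication and TCP as the example of connection-oriented communication (the answer above does not name specific protocols); the function names `udp_send_once` and `tcp_send_once` are hypothetical.

```python
import socket

def udp_send_once(payload: bytes) -> bytes:
    """Connectionless: no handshake; each sendto() is an independent datagram."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))        # port 0: let the OS pick a free port
        receiver.settimeout(2.0)
        # No connection is set up; the datagram is simply addressed and sent.
        sender.sendto(payload, receiver.getsockname())
        # If this datagram were lost, UDP itself would never resend it.
        data, _addr = receiver.recvfrom(65535)
        return data
    finally:
        sender.close()
        receiver.close()

def tcp_send_once(payload: bytes) -> bytes:
    """Connection-oriented: a connection is established before any data flows."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        # create_connection() performs the TCP handshake with the listening socket.
        client = socket.create_connection(server.getsockname(), timeout=2.0)
        conn, _addr = server.accept()
        with client, conn:
            client.sendall(payload)            # TCP retransmits lost segments on its own
            client.shutdown(socket.SHUT_WR)    # tell the receiver no more data is coming
            chunks = []
            while True:
                chunk = conn.recv(4096)
                if not chunk:                  # empty read: sender has finished
                    break
                chunks.append(chunk)
            return b"".join(chunks)
    finally:
        server.close()
```

Both calls return the bytes that were sent; the difference is that the TCP version cannot send anything until `create_connection()` has completed the handshake, while the UDP version sends immediately.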

que(1)(b) -


Consider data communication between a source machine and a destination machine with 3 routers in between (there may be more routers between them).

Packet switching

Packet switching is basically a special case of message switching. It came into existence with the advancement of computer communication and networking.

In packet switching, the message is broken into smaller pieces. This allows each router to start forwarding as soon as the first packet of the message has arrived, instead of waiting for the whole message. This saves an enormous amount of time, especially when the number of hops between source and destination is large. The propagation delay itself does not change; the saving comes from overlapping the store-and-forward transmissions on successive links.
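The time saving can be checked with a small calculation. This Python sketch is a simplified model under stated assumptions: only transmission (store-and-forward) delay is counted, propagation and queuing delays are ignored, all links have the same rate, and every packet is assumed to carry a full payload. The function names and the numbers in the usage note are hypothetical.

```python
import math

def message_switching_delay(msg_bits, header_bits, rate_bps, links):
    # Each router must receive the whole message (plus its header) before forwarding it.
    return links * (msg_bits + header_bits) / rate_bps

def packet_switching_delay(msg_bits, payload_bits, header_bits, rate_bps, links):
    # The message is split into n packets, each with its own header.
    n = math.ceil(msg_bits / payload_bits)
    per_packet = (payload_bits + header_bits) / rate_bps
    # The first packet crosses every link; each later packet arrives one packet-time behind it.
    return (links + n - 1) * per_packet
```

With 3 routers (4 links), an 8000-bit message, 160-bit headers, 1000-bit packet payloads and 1 Mbps links, `message_switching_delay(8000, 160, 1e6, 4)` gives about 32.6 ms, while `packet_switching_delay(8000, 1000, 160, 1e6, 4)` gives about 12.8 ms, despite the extra headers.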

Message switching overhead is lower compared to packet switching. Fig-3 depicts that a single datagram is transmitted in message switching. As mentioned, the message is appended with a header before transmission. In packet switching, the message is divided into smaller packets and each packet is appended with a header before transmission.

Overhead in message switching = header/(header+message)

Overhead in packet switching = n*header/(n*header+message),

Where, n = ⌈message/packet_size⌉ (the number of packets)

Que(5)(a)

The Hidden Node Problem -

•Wireless stations have transmission ranges and not all stations are within radio range of each other.

•Simple CSMA will not work!

•C transmits to B.

•If A “senses” the channel, it will not hear C’s transmission and will falsely conclude that it can begin a transmission to B.

The Exposed Node Problem -

•The inverse problem.

•B wants to send to C and listens to the channel.

•When B hears A’s transmission, B falsely assumes that it cannot send to C.

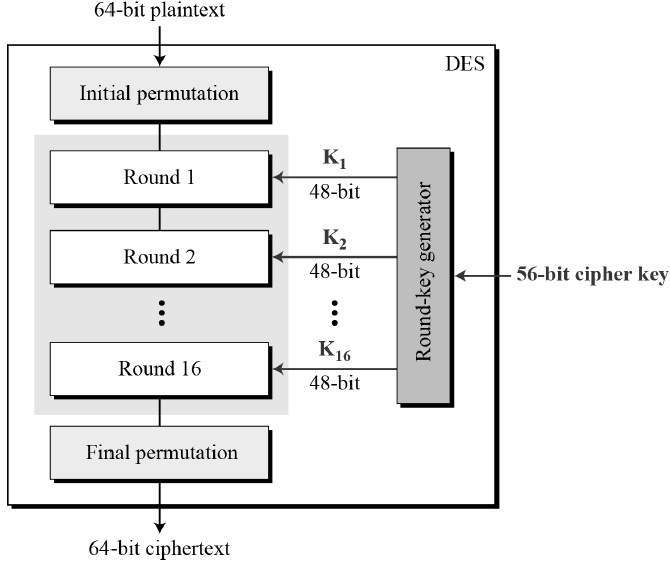
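The question also asks about virtual channel sensing. In IEEE 802.11 this is done with RTS/CTS: the sender announces the transfer with a Request To Send, the receiver answers with a Clear To Send, and every station that overhears either frame sets its Network Allocation Vector (NAV) and defers for the announced duration. The toy Python model below is a sketch under assumptions: the three-station A, B, C layout follows the hidden node example above, radio range is modelled as a simple set of links, and the function names are hypothetical.

```python
# Hypothetical layout from the example: A and C are both in range of B, but not of each other.
LINKS = {frozenset("AB"), frozenset("BC")}

def in_range(x: str, y: str) -> bool:
    return x == y or frozenset((x, y)) in LINKS

def carrier_sense_busy(station, transmitters):
    """Physical carrier sensing (plain CSMA): busy only if the station itself hears a sender."""
    return any(in_range(station, t) for t in transmitters)

def nav_busy(station, cts_senders):
    """Virtual carrier sensing: a station that overhears a CTS sets its NAV and defers."""
    return any(in_range(station, r) for r in cts_senders)

# C is transmitting to B.
print(carrier_sense_busy("A", ["C"]))  # False: A cannot hear C, so plain CSMA lets A collide at B
print(nav_busy("A", ["B"]))            # True: B's CTS reached A, so A stays silent
```

The point of the design is that the CTS is sent by the receiver, so it reaches exactly the stations that could interfere at the receiver, which is the set that physical sensing at the sender cannot see.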

QUE(13)

- The Data Encryption Standard (DES) is a symmetric-key block cipher published by the National Institute of Standards and Technology (NIST). DES is an implementation of a Feistel cipher. It uses a 16-round Feistel structure, and the block size is 64 bits. Although the key is 64 bits long, DES has an effective key length of 56 bits, since 8 of the 64 bits are not used by the encryption algorithm (they function only as check bits).

- Since DES is based on the Feistel Cipher, all that is required to specify DES is −

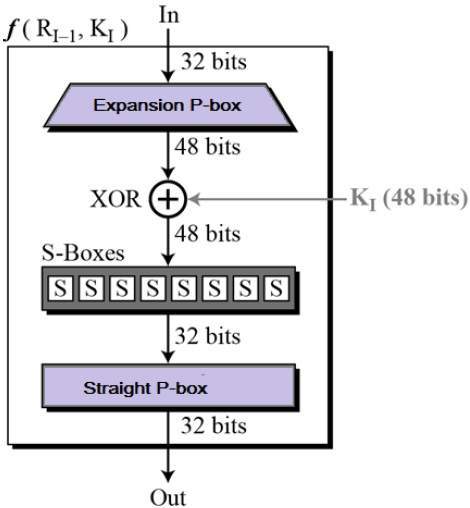

- Round function

- Key schedule

- Any additional processing − Initial and final permutation
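The Feistel structure that DES relies on can be sketched in a few lines of Python. This is not DES: the round function, key schedule and names below are hypothetical stand-ins, and the initial and final permutations are omitted. What it does show faithfully is the 16-round Feistel skeleton on a 64-bit block, and the property that decryption is the same procedure with the subkeys applied in reverse order.

```python
MASK32 = 0xFFFFFFFF
MASK64 = 0xFFFFFFFFFFFFFFFF

def toy_round_function(half: int, subkey: int) -> int:
    # Stand-in for the DES f-function (the real one uses expansion, S-boxes and a P-box).
    return ((half * 0x9E3779B1) ^ subkey) & MASK32

def toy_key_schedule(key: int, rounds: int = 16) -> list[int]:
    # Hypothetical schedule: rotate the 64-bit key left and keep 32 bits (DES uses PC-1/PC-2).
    subkeys = []
    for r in range(1, rounds + 1):
        rotated = ((key << r) | (key >> (64 - r))) & MASK64
        subkeys.append(rotated & MASK32)
    return subkeys

def feistel_encrypt(block: int, subkeys: list[int]) -> int:
    left, right = block >> 32, block & MASK32      # split the 64-bit block into halves
    for k in subkeys:
        # L_i = R_(i-1),  R_i = L_(i-1) XOR f(R_(i-1), K_i)
        left, right = right, left ^ toy_round_function(right, k)
    # As in DES, the halves are swapped once more after the last round.
    return (right << 32) | left

def feistel_decrypt(block: int, subkeys: list[int]) -> int:
    # Same structure, subkeys in reverse order.
    return feistel_encrypt(block, list(reversed(subkeys)))
```

For example, with `ks = toy_key_schedule(0x133457799BBCDFF1)`, `feistel_decrypt(feistel_encrypt(0x0123456789ABCDEF, ks), ks)` returns the original block. Note that the round function never has to be inverted; this is why DES can use a non-invertible f-function.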

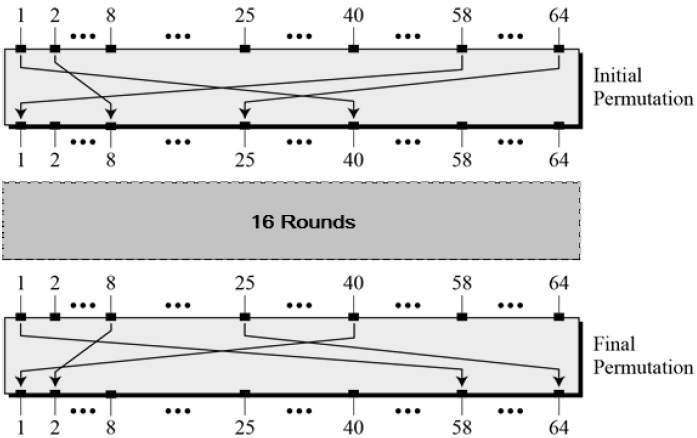

Initial and Final Permutation

Round Function

Key Generation

QUE(12)

ANS--Leaky bucket:

- When the host has to send a packet, the packet is put into the bucket.

- The bucket leaks at a constant rate.

- Bursty traffic is converted into uniform traffic by the leaky bucket.

- In practice, the bucket is a finite queue that outputs at a finite rate.
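A minimal discrete-time sketch of the leaky bucket described above, in Python. The tick-based model, the function name `leaky_bucket` and the input trace are illustrative assumptions; the behaviour (finite queue, constant output rate, overflow packets dropped) follows the bullets above.

```python
def leaky_bucket(arrivals, capacity, rate):
    """Discrete-time leaky bucket.

    arrivals[t] -- packets arriving at tick t (hypothetical input trace)
    capacity    -- packets the bucket (a finite queue) can hold
    rate        -- packets that leak out per tick, whatever the input
    Returns (packets sent in each tick, total packets dropped).
    """
    queued = 0
    sent, dropped = [], 0
    for arriving in arrivals:
        accepted = min(arriving, capacity - queued)  # a full bucket overflows...
        dropped += arriving - accepted               # ...and the overflow packets are lost
        queued += accepted
        out = min(queued, rate)                      # constant output rate
        queued -= out
        sent.append(out)
    return sent, dropped
```

For a burst of 5 packets into a bucket of capacity 4 leaking 1 packet per tick, `leaky_bucket([5, 0, 0, 0, 0, 0], 4, 1)` returns `([1, 1, 1, 1, 0, 0], 1)`: the burst leaves as a uniform stream and one packet is dropped.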

Token bucket

- Here the bucket holds tokens generated at regular intervals of time.

- The bucket has a maximum capacity.

- When a packet is ready, a token is removed from the bucket and the packet is sent.

- If there is no token in the bucket, the packet cannot be sent.

Main advantages of token bucket over leaky bucket -

1. If the bucket is full in token bucket, tokens are discarded, not packets, while in leaky bucket, packets are discarded.

2. Token bucket can send large bursts at a faster rate, while leaky bucket always sends packets at a constant rate.

Implementation of Token bucket
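One way to implement the token bucket is as a counter of tokens that is incremented at a fixed rate and capped at the bucket capacity. The Python sketch below uses discrete ticks for clarity; the function name, the tick model and the unbounded waiting queue are assumptions made for illustration.

```python
def token_bucket(arrivals, capacity, rate, tokens=0):
    """Discrete-time token bucket.

    arrivals[t] -- packets arriving at tick t (hypothetical input trace)
    capacity    -- maximum tokens the bucket can hold
    rate        -- tokens added per tick
    tokens      -- tokens present at the start
    Packets without a token wait in an (assumed unbounded) queue; none are dropped.
    Returns (packets sent in each tick, packets still waiting at the end).
    """
    waiting = 0
    sent = []
    for arriving in arrivals:
        tokens = min(capacity, tokens + rate)   # tokens beyond capacity are discarded
        waiting += arriving
        out = min(waiting, tokens)              # one token is spent per packet sent
        waiting -= out
        tokens -= out
        sent.append(out)
    return sent, waiting
```

After three idle ticks the bucket holds enough tokens for a burst: `token_bucket([0, 0, 0, 6, 0, 0], capacity=4, rate=1)` returns `([0, 0, 0, 4, 1, 1], 0)`. Four packets leave at once, which a leaky bucket with the same rate would never allow, and the rest follow at the token rate.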

Question(11)

Ans- Bit rate and baud rate are two terms often used in data communication. Bit rate is simply the number of bits (i.e., 0’s and 1’s) transmitted per unit time, while baud rate is the number of signal units transmitted per unit time that are needed to represent those bits.

Bit rate = baud rate × number of bits per baud

BIT RATE-

1-Bit rate is the count of bits per sec

2-It determines the number of bits travelling per second

3-The emphasis is on computer efficiency

4-It cannot determine the bandwidth

BAUD RATE-

1-It is the count of signal units per second

2-It determines how many times per second the state of the signal changes

3-The emphasis is on data transmission over the channel

4-It determines how much bandwidth is required to send the signal

Baud rate = bit rate / number of bits per signal unit
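A worked example of the two formulas, as a small Python sketch. The 2400-baud figure is a made-up example value, and the helper names are hypothetical. The number of bits per signal unit is taken as log2 of the number of distinct signal states; the constellation helpers list unscaled (I, Q) points for QPSK and QAM-16 only to count those states (no Gray coding or power normalisation).

```python
import math

def bits_per_symbol(signal_states: int) -> int:
    # Each signal unit selects one of M states, so it carries log2(M) bits.
    return int(math.log2(signal_states))

def bit_rate(baud: float, signal_states: int) -> float:
    return baud * bits_per_symbol(signal_states)

def baud_rate(bps: float, signal_states: int) -> float:
    return bps / bits_per_symbol(signal_states)

def qpsk_constellation():
    # 4 points of equal amplitude, differing only in phase.
    return [(i, q) for i in (-1, 1) for q in (-1, 1)]

def qam16_constellation():
    # 4 x 4 grid: both amplitude and phase vary.
    levels = (-3, -1, 1, 3)
    return [(i, q) for i in levels for q in levels]
```

At 2400 baud, QPSK (4 states, 2 bits per signal unit) gives `bit_rate(2400, 4) == 4800` bps, while QAM-16 (16 states, 4 bits per signal unit) gives `bit_rate(2400, 16) == 9600` bps over the same baud rate. Conversely, `baud_rate(9600, 16)` is 2400.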

BANDWIDTH-

Bandwidth and frequency are both measuring terms of networking. Bandwidth measures the amount of data that can be transmitted per unit time.

Unit-Bits/sec

While bandwidth is traditionally expressed in bits per second (bps), modern network links have greater capacity, which is typically measured in millions of bits per second (megabits per second, or Mbps) or billions of bits per second (gigabits per second, or Gbps).

Bandwidth connections can be symmetrical.


Modulation scheme for QPSK

Quadrature Phase Shift Keying (QPSK) is a variation of BPSK. It is also a Double Side Band Suppressed Carrier (DSBSC) modulation scheme, which sends two bits of digital information at a time, called bigits.

Modulation scheme for QAM-16

Quadrature Amplitude Modulation (QAM) uses both amplitude and phase components to provide a form of modulation with high spectrum usage efficiency. QAM-16 uses 16 distinct amplitude and phase combinations, so each signal unit carries 4 bits.

Que(9)

Ans--

Windowing and Window Size: Window management in TCP is an important concept that ensures reliability in packet delivery and reduces the time wasted waiting for an acknowledgment after each packet.

Window size: window size determines the amount of data that you can transmit before receiving an acknowledgment. Sliding window refers to the fact that the window size is negotiated dynamically during the TCP session.

- Expectational acknowledgment means that the acknowledgment number refers to the octet that is next expected.

- If the source receives no acknowledgment, it knows to retransmit the data at a slower rate.

The mechanism of the sliding window can be understood easily with the help of the diagrams below:

Figure one

Figure two
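The sender side of the sliding window can be sketched as follows. This Python model is an illustration under simplifying assumptions: sequence numbers count bytes from 0, no segments are lost, and the class and method names are hypothetical. It shows the two rules described above: unacknowledged data may never exceed the advertised window, and an expectational ACK (the next byte expected) slides the window forward.

```python
class SlidingWindowSender:
    def __init__(self, data_len: int, window: int):
        self.data_len = data_len  # total bytes the application wants to send
        self.window = window      # window advertised by the receiver, in bytes
        self.base = 0             # oldest unacknowledged byte
        self.next_seq = 0         # next byte to send

    def send(self, mss: int):
        """Send segments while they still fit inside [base, base + window)."""
        segments = []
        limit = self.base + self.window
        while self.next_seq < self.data_len and self.next_seq < limit:
            size = min(mss, self.data_len - self.next_seq, limit - self.next_seq)
            segments.append((self.next_seq, size))   # (sequence number, length)
            self.next_seq += size
        return segments

    def on_ack(self, ack_no: int, new_window: int):
        """ack_no is expectational: the next byte the receiver expects."""
        self.base = max(self.base, ack_no)
        self.window = new_window
```

With `s = SlidingWindowSender(4000, 2048)`, `s.send(1024)` returns `[(0, 1024), (1024, 1024)]` and a second `send` returns `[]` because the window is full. After `s.on_ack(1024, 2048)` the window slides and `s.send(1024)` returns `[(2048, 1024)]`.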

Que(10).

Ans---

Silly Window Syndrome (SWS) is a problem that can arise in poor implementations of the transmission control protocol (TCP) when the receiver is only able to accept a few bytes at a time or when the sender transmits data in small segments repeatedly. The resulting large number of small packets, or tinygrams, on the network can lead to a significant reduction in network performance and can indicate an overloaded server or a sending application that is limiting throughput.

What Causes Silly Window Syndrome from the Sender Side?

On the sender's side, silly window syndrome can be caused by an application that only generates very small amounts of data to send at a time. Even if the receiver advertises a large window, the default behavior for TCP would be to send each individual small segment instead of buffering the data as it comes in and sending it in one larger segment.

A Common Solution

Nagle's algorithm is one of the most common ways of dealing with silly window syndrome, but the algorithm is still widely misunderstood and requires some tuning and optimization to make it work correctly in most environments. Here's what happens in a TCP transaction when you have Nagle's algorithm turned on:

- The first segment is sent regardless of size.

- Next, if the receiving window and the data to send are at least the maximum segment size (MSS), a full MSS segment is sent.

- Otherwise, if the sender is still waiting on the receiver to acknowledge previously sent data, the sender buffers its data until it receives an acknowledgement and then sends another segment. If there is no unacknowledged data, any available data is sent immediately.
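The three rules above can be written as a single decision function. This Python sketch is an assumption-labelled model of the rules as listed, not the code of any real TCP stack; the function name and parameters are hypothetical.

```python
def nagle_bytes_to_send(buffered: int, usable_window: int, mss: int, unacked_bytes: int) -> int:
    """Return how many bytes to send now under Nagle's rules (0 means keep buffering)."""
    if buffered == 0:
        return 0
    if buffered >= mss and usable_window >= mss:
        return mss                              # a full-sized segment is always sent
    if unacked_bytes == 0:
        return min(buffered, usable_window)     # nothing in flight: send what is available
    return 0                                    # small data while awaiting an ACK: buffer it
```

For example, `nagle_bytes_to_send(1, 65535, 1460, 0)` returns 1 (the first small segment goes out), but `nagle_bytes_to_send(1, 65535, 1460, 1)` returns 0, so keystrokes typed while that byte is unacknowledged are collected into one later segment. Applications that need to turn the algorithm off usually do so with the `TCP_NODELAY` socket option.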

While Nagle's algorithm increases bandwidth efficiency, it impacts latency by introducing a delay since only one segment is sent per round trip time. Applications that require data to be sent immediately usually require Nagle's algorithm to be turned off.

What Causes Silly Window Syndrome from the Receiver-Side?

If the receiver processes data slower than the sender transmits it, eventually the usable window becomes smaller than the maximum segment size (MSS) that the sender is allowed to send. However, since the sender wants to get its data to the receiver as quickly as possible, it immediately sends a smaller packet to match the usable window. As long as the receiver continues to consume data at a slower rate, the usable window, and therefore the transmitted segments, will get smaller and smaller.

Solutions

There are some settings you can tweak to minimize the likelihood of silly window syndrome being caused on the receiver side:

- When the receiver's window size becomes too small, the receiver doesn't advertise its window until enough space opens up in its buffer for it to advertise a maximum-sized segment or until its buffer is at least half empty.

- Instead of sending acknowledgments that contain the updated receive window as soon as the window opens up, the receiver can delay the acknowledgments. This reduces network congestion since TCP acknowledgments are cumulative, but the delay must be set low enough to avoid the sender timing out and retransmitting segments.
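The first receiver-side rule (commonly called Clark's solution) fits in one function. This Python sketch is illustrative only; the function name and the byte values in the example are hypothetical.

```python
def window_to_advertise(free_space: int, buffer_size: int, mss: int) -> int:
    # Open the window only when a full segment fits or the buffer is at least half empty.
    if free_space >= min(mss, buffer_size // 2):
        return free_space
    return 0   # keep advertising a closed window instead of a "silly" tiny one
```

With an 8192-byte buffer and an MSS of 1460, freeing 100 bytes still advertises a window of 0, so the sender is not tempted to send a 100-byte segment; once 1460 bytes are free, the full 1460 is advertised.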

Que(8)

Ans:

Header format of TCP

This is the layout of TCP headers:

- Source TCP port number (2 bytes)

- Destination TCP port number (2 bytes)

- Sequence number (4 bytes)

- Acknowledgment number (4 bytes)

- TCP data offset (4 bits)

- Reserved data (3 bits)

- Control flags (up to 9 bits)

- Window size (2 bytes)

- TCP checksum (2 bytes)

- Urgent pointer (2 bytes)

- TCP optional data (0-40 bytes)
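The control flags occupy the low 9 bits of the 16-bit word that also holds the data offset and reserved bits. Among them, the ACK bit means the acknowledgment number field is valid, the RST bit abruptly resets (aborts) the connection, and the PSH bit asks the receiver to push the data to the application without waiting to fill its buffer. The Python sketch below decodes the fixed 20-byte header with the standard `struct` module; the function name and the sample header values are hypothetical.

```python
import struct

FLAG_BITS = {"FIN": 0x001, "SYN": 0x002, "RST": 0x004, "PSH": 0x008,
             "ACK": 0x010, "URG": 0x020, "ECE": 0x040, "CWR": 0x080, "NS": 0x100}

def parse_tcp_header(raw: bytes) -> dict:
    # Fixed part: ports (2+2), seq (4), ack (4), offset/reserved/flags (2), window, checksum, urgent (2 each)
    src, dst, seq, ack, off_flags, window, checksum, urgent = struct.unpack("!HHIIHHHH", raw[:20])
    data_offset = off_flags >> 12          # top 4 bits: header length in 32-bit words
    flags = off_flags & 0x1FF              # low 9 bits: NS .. FIN
    return {
        "src_port": src, "dst_port": dst, "seq": seq, "ack": ack,
        "header_len": data_offset * 4,     # in bytes; > 20 means options are present
        "flags": {name for name, bit in FLAG_BITS.items() if flags & bit},
        "window": window, "checksum": checksum, "urgent_ptr": urgent,
    }

# Hypothetical segment: 5-word header with the PSH and ACK bits set.
sample = struct.pack("!HHIIHHHH", 443, 51000, 1000, 2000, (5 << 12) | 0x018, 65535, 0, 0)
print(sorted(parse_tcp_header(sample)["flags"]))   # ['ACK', 'PSH']
```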

Que(7)

Ans--:: BGP is, quite literally, the protocol that makes the internet work. BGP is short for Border Gateway Protocol, and it is the routing protocol used to route traffic across the internet. Routing protocols (such as BGP, OSPF, RIP, EIGRP, etc.) are designed to help routers advertise adjacent networks, and since the internet is a network of networks, BGP helps to propagate these networks to all BGP routers across the world.

Within the Internet, an autonomous system (AS) is a network controlled by a single entity, typically an Internet Service Provider or a very large organization with independent connections to multiple networks.

Working of BGP::

These Autonomous Systems must have an officially registered autonomous system number (ASN), which they get from their Regional Internet Registry: AFRINIC, ARIN, APNIC, LACNIC or RIPE NCC

Peering

Two routers that have established a connection for exchanging BGP information are referred to as BGP peers. Such BGP peers exchange routing information via BGP sessions that run over TCP (port 179), which is a reliable, connection-oriented protocol.

Selecting the Best Path

Once the BGP session is established, the routers can advertise a list of network routes that they have access to, and each router scrutinizes the advertised routes to find the best one, for example the route with the shortest AS path.
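This is also where BGP avoids count-to-infinity. BGP is a path vector protocol: every route advertisement carries the full list of autonomous systems it has passed through (the AS_PATH), and a router rejects any route whose AS_PATH already contains its own AS number, because following it would loop back to itself. The Python sketch below is a simplification: real BGP compares several attributes (such as local preference) before AS_PATH length, and the function name, neighbour names and private AS numbers are hypothetical.

```python
from typing import Optional

Route = tuple[str, tuple[int, ...]]   # (neighbour name, AS_PATH)

def best_route(my_asn: int, adverts: list[Route]) -> Optional[Route]:
    # Loop prevention: a path that already contains our own ASN would lead back to us.
    loop_free = [(nbr, path) for nbr, path in adverts if my_asn not in path]
    if not loop_free:
        return None
    # Simplification: prefer the shortest AS_PATH (real BGP checks other attributes first).
    nbr, path = min(loop_free, key=lambda route: len(route[1]))
    # When re-advertising the route, a router prepends its own ASN.
    return nbr, (my_asn,) + path

adverts = [("R1", (65002, 65003)),
           ("R2", (65004, 65001, 65003)),   # already passed through AS 65001: rejected
           ("R3", (65005, 65006, 65007))]
print(best_route(65001, adverts))   # ('R1', (65001, 65002, 65003))
```

Because a looping route is discarded as soon as it arrives, routers never keep increasing a hop count around a loop, which is what causes count-to-infinity in plain distance vector routing.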

Of course, BGP does not make sense when you are connected only to one other peer (such as your ISP), because that peer is always going to be the best (and only) path to other networks. However, when you are

Que(6)

Ans


Ethernet-

- Ethernet is one of the standard LAN technologies used for building wired LANs.

- It is defined under IEEE 802.3.

- Classic Ethernet uses bus topology.

- In bus topology, all the stations are connected to a single half duplex link.

- Ethernet uses CSMA/CD as its access control method to deal with collisions.

- For Normal Ethernet, operational bandwidth is 10 Mbps.

- For Fast Ethernet, operational bandwidth is 100 Mbps.

- For Gigabit Ethernet, operational bandwidth is 1 Gbps.

Ethernet Frame Format-

1. Preamble-

- It is a 7 byte field that contains a pattern of alternating 0’s and 1’s.

- It alerts the stations that a frame is going to start.

- It also enables the sender and receiver to establish bit synchronization.

2. Start Frame Delimiter (SFD)-

- It is a 1 byte field which is always set to 10101011.

- The last two bits “11” indicate the end of the Start Frame Delimiter and mark the beginning of the frame.

3. Destination Address-

- It is a 6 byte field that contains the MAC address of the destination for which the data is destined.

4. Source Address-

- It is a 6 byte field that contains the MAC address of the source which is sending the data.

5. Length-

- It is a 2 byte field which specifies the length (number of bytes) of the data field.

- This field is required because Ethernet uses variable sized frames.
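After the Length field, the frame carries the Data field (46 to 1500 bytes) and a 4-byte CRC (frame check sequence), which together explain the size limits asked about in the question. Counting from the destination address to the CRC (the preamble and SFD are not counted), an Ethernet frame is therefore 64 to 1518 bytes. The 64-byte minimum comes from CSMA/CD: the sender must still be transmitting when news of a collision can return, so the frame must last at least one round-trip time (512 bit times, or 51.2 µs at 10 Mbps). The Python sketch below applies these rules; the function names are hypothetical.

```python
HEADER = 6 + 6 + 2        # destination MAC, source MAC, length field
FCS = 4                   # CRC-32 frame check sequence
MIN_DATA, MAX_DATA = 46, 1500

def ethernet_frame_size(payload_len: int) -> tuple[int, int]:
    """Return (frame size in bytes, padding bytes added) for a given data length."""
    if payload_len > MAX_DATA:
        raise ValueError("data exceeds 1500 bytes; a higher layer must fragment it")
    padding = max(0, MIN_DATA - payload_len)   # short data is padded up to 46 bytes
    return HEADER + payload_len + padding + FCS, padding

def min_frame_bits(rate_bps: float, round_trip_s: float) -> float:
    # CSMA/CD: transmission time must be at least the round-trip (slot) time.
    return rate_bps * round_trip_s
```

For example, `ethernet_frame_size(10)` returns `(64, 36)`, showing why a few bytes of chat data still cost a full 64-byte frame, and `ethernet_frame_size(1500)` returns `(1518, 0)`. `min_frame_bits(10_000_000, 51.2e-6)` is approximately 512 bits, i.e. 64 bytes.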

Limitations of Using Ethernet-

- It cannot be used for real-time applications, which require the delivery of data within some time limit.

- Ethernet is not reliable because of the high probability of collisions, and a high number of collisions may delay the delivery of data to its destination.

- It cannot be used for interactive applications. Interactive applications like chatting require the delivery of even very small amounts of data, but Ethernet requires the data field to be at least 46 bytes long.

- It cannot be used for client-server applications, which require the server to be given higher priority than clients, because Ethernet has no facility to set priorities.
